By James Bushell, Sr. Platform Engineer, Azure Lead

Kubernetes is by far the most popular container orchestration tool, yet the complexity of operating it has driven the rise of fully managed Kubernetes services over the past few years.

Although Azure supports multiple container tools, it’s now going all-in on Kubernetes and will deprecate its original offerings this year. The great part about cloud-based managed Kubernetes services like Azure Kubernetes Service (AKS) is that they integrate natively with other Azure services and take much of the operational burden off your plate: you don’t have to worry about the availability of the Kubernetes control plane, building your own autoscaling, or most of the patching of your underlying VMs.

In this blog post, we’ll be reviewing the basics of Kubernetes and AKS, before diving into a real-life use case with AKS.


- What is Kubernetes?

- Why use Kubernetes?

- Azure Kubernetes Service (AKS) Deep Dive

- Real-Life AKS Deployment

Basics of Kubernetes

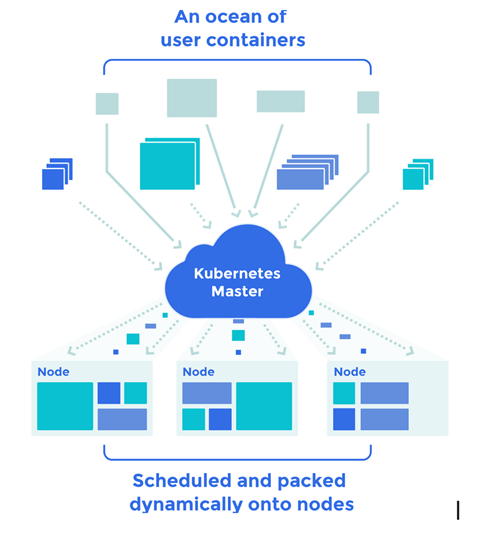

Kubernetes is a portable, extensible, open source platform for container orchestration. It allows developers and engineers to manage containerized workloads and services through both declarative configuration and automation.

Basic benefits of Kubernetes include:


- Run distributed systems resiliently

- Automatically mount a storage system

- Automated rollouts and rollbacks

- Self-healing

- Secret and configuration management

Key Terms

API Server: Exposes the Kubernetes API. This is how management tools such as kubectl interact with the cluster.

Controller Manager: Watches the state of the cluster through the API server and, when necessary, makes changes to move the current state toward the desired state.

etcd: A highly available key-value store that holds the Kubernetes cluster state.

Scheduler: Assigns unscheduled pods to nodes, choosing the most suitable node for each pod based on its resource requests and constraints.

Node: A physical or virtual machine on which Kubernetes runs your containers.

Kube-proxy: A network proxy on each node that forwards requests for Kubernetes services to their backend pods.

Pods: The smallest deployable unit in Kubernetes: one or more containers grouped together that share the same network and storage resources.

Kubelet: The agent on each node that processes orchestration requests and starts the pods that the scheduler has assigned to that node.
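To make the controller idea concrete, here is a toy Python sketch of the reconcile pattern the Controller Manager follows: compare the desired state with the current state and act on the difference. The `Cluster` class and `reconcile` function are hypothetical, in-memory stand-ins; real controllers watch the API server rather than a Python dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy, in-memory stand-in for the state the API server and etcd hold."""
    desired_replicas: dict = field(default_factory=dict)  # app -> desired pod count
    running_pods: dict = field(default_factory=dict)      # app -> list of pod names
    _next_id: int = 0

    def start_pod(self, app: str) -> None:
        self._next_id += 1
        self.running_pods.setdefault(app, []).append(f"{app}-{self._next_id}")

    def stop_pod(self, app: str) -> None:
        self.running_pods[app].pop()


def reconcile(cluster: Cluster) -> list:
    """One pass of a controller loop: compare desired vs. current and fix the difference."""
    actions = []
    for app, desired in cluster.desired_replicas.items():
        current = len(cluster.running_pods.get(app, []))
        if current < desired:
            for _ in range(desired - current):
                cluster.start_pod(app)
            actions.append(f"{app}: started {desired - current}")
        elif current > desired:
            for _ in range(current - desired):
                cluster.stop_pod(app)
            actions.append(f"{app}: stopped {current - desired}")
    return actions


if __name__ == "__main__":
    cluster = Cluster(desired_replicas={"web": 3})
    print(reconcile(cluster))          # ['web: started 3']
    cluster.running_pods["web"].pop()  # simulate a crashed pod
    print(reconcile(cluster))          # ['web: started 1']
    print(reconcile(cluster))          # [] (already converged)
```

Running the loop repeatedly is what gives Kubernetes its self-healing behavior: a crashed pod simply shows up as a gap between desired and current state on the next pass.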

Why Use Kubernetes?

When running containers in a production environment, you need to manage them so that they keep operating as expected and downtime is avoided.

- Container Orchestration: Without container orchestration, if a container goes down, an engineer needs to notice the failure and manually start a new one. Wouldn’t it be better if a system handled this automatically? Kubernetes provides a robust declarative framework to run your containerized applications and services resiliently.

- Cloud Agnostic: Kubernetes has been designed and built to run anywhere (public, private, or hybrid clouds).

- Reduces Vendor Lock-In: Your containerized application and Kubernetes manifests run the same way on any platform with minimal changes.

- Increase Developer Agility and Faster Time-to-Market: Spend less time scripting deployment workflows and more time developing. Kubernetes uses declarative configuration, which lets engineers define how their service should run; Kubernetes then ensures the application stays in that state.

- Cloud Aware: Kubernetes understands and supports many public clouds, such as Google Cloud, Azure, and AWS. This allows Kubernetes to provision cloud resources such as VMs, load balancers, public IPs, and storage.
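As an illustration of declarative configuration, the following Python sketch builds a minimal `apps/v1` Deployment as a plain dictionary and prints it as JSON. The app name `web`, the `nginx:1.25` image, and the `deployment_manifest` helper are assumptions for this example; most teams write the same manifest directly in YAML.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state of a service."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # Kubernetes keeps this many pods running
            "selector": {"matchLabels": labels},  # which pods this Deployment owns
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image; kubectl accepts JSON manifests as well as YAML.
    manifest = deployment_manifest("web", "nginx:1.25", replicas=3, port=80)
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file and running `kubectl apply -f web.json` would ask Kubernetes to keep three replicas running; if a pod dies, the Deployment controller starts a replacement without any scripted intervention.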

Basics of Azure Kubernetes Service

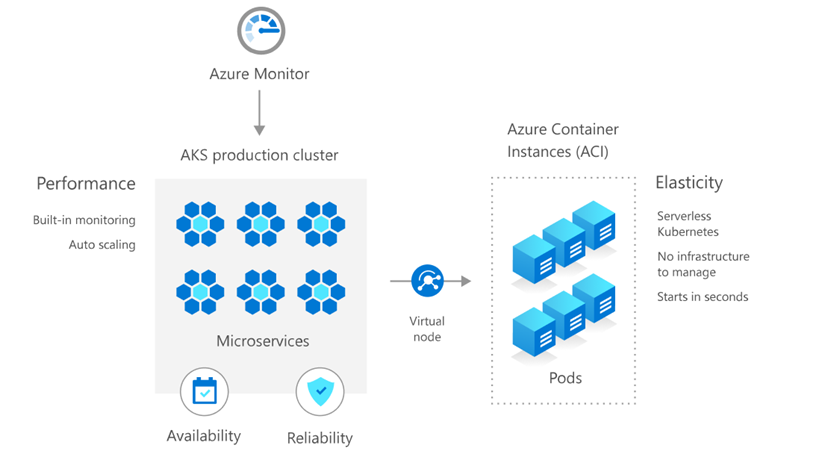

Azure Kubernetes Service (AKS) is a fully managed service that lets you run Kubernetes in Azure without having to operate the Kubernetes control plane yourself. Azure manages the complex parts of running Kubernetes so you can focus on your containers. Basic features include:

- Pay only for the nodes (VMs)

- Easier cluster upgrades

- Integrated with various Azure and OSS tools and services

- Kubernetes RBAC and Azure Active Directory Integration

- Enforce rules defined in Azure Policy across multiple clusters

- Automatically scale your nodes with the cluster autoscaler

- Burst beyond your nodes’ capacity by scheduling containers on Azure Container Instances (via virtual nodes)

Azure Kubernetes Best Practices

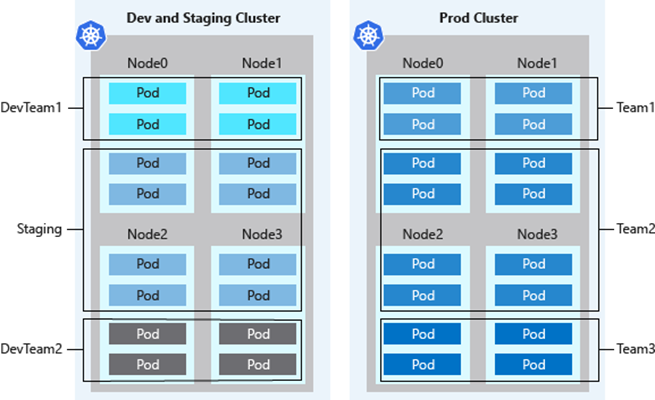

Cluster Multi-Tenancy

- Logically isolate teams and projects within a cluster to minimize the number of physical AKS clusters you deploy

- Namespaces provide this isolation inside a Kubernetes cluster

- The same best practices as a hub-and-spoke design apply, but within the Kubernetes cluster itself
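A minimal sketch of namespace-based isolation, assuming one namespace per team and environment. The team names, the `team`/`environment` labels, and the `namespace_manifests` helper are hypothetical; labels like these make it easier to target quotas, network policies, or Azure Policy at a group of namespaces.

```python
import json


def namespace_manifests(teams: list, environments: list) -> list:
    """One namespace per team/environment pair, labeled so policies and quotas can target them."""
    manifests = []
    for team in teams:
        for env in environments:
            manifests.append({
                "apiVersion": "v1",
                "kind": "Namespace",
                "metadata": {
                    "name": f"{team}-{env}",
                    "labels": {"team": team, "environment": env},
                },
            })
    return manifests


if __name__ == "__main__":
    # Hypothetical team and environment names.
    items = namespace_manifests(["payments", "search"], ["dev", "stage"])
    # A v1 List lets kubectl apply all namespaces from a single file.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```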

Scheduling and Resource Quotas


- Enforce resource quotas – Plan out and apply resource quotas at the namespace level

- Plan for availability

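- Define pod disruption budgets – Limit how many replicas of an application can be unavailable at once during voluntary disruptions, such as node drains during upgrades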

- Limit resource intensive applications – Apply taints and tolerations to constrain resource intensive applications to specific nodes
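The following Python sketch shows what these controls can look like as Kubernetes objects: a namespace-level `ResourceQuota`, a `PodDisruptionBudget`, and a toleration for a tainted node pool. The quota values, object names, and the `workload=heavy` taint are illustrative assumptions, not recommendations; `policy/v1` for PodDisruptionBudget requires Kubernetes 1.21 or later (older clusters use `policy/v1beta1`).

```python
import json


def resource_quota(namespace: str) -> dict:
    """Cap the total CPU/memory that all pods in a namespace may request or use."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {"hard": {
            "requests.cpu": "4", "requests.memory": "8Gi",
            "limits.cpu": "8", "limits.memory": "16Gi",
        }},
    }


def pod_disruption_budget(namespace: str, app: str, min_available: int) -> dict:
    """Keep at least `min_available` pods of an app running during voluntary disruptions."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{app}-pdb", "namespace": namespace},
        "spec": {"minAvailable": min_available, "selector": {"matchLabels": {"app": app}}},
    }


# Pods with this toleration may be scheduled onto nodes tainted with
#   kubectl taint nodes <node> workload=heavy:NoSchedule
# The taint keeps other pods off those nodes; pairing the toleration with a
# nodeSelector/affinity also keeps the heavy pods off the other nodes.
HEAVY_WORKLOAD_TOLERATION = {
    "key": "workload", "operator": "Equal", "value": "heavy", "effect": "NoSchedule",
}

if __name__ == "__main__":
    for obj in (resource_quota("payments-dev"), pod_disruption_budget("payments-dev", "web", 2)):
        print(json.dumps(obj, indent=2))
```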

Cluster Security

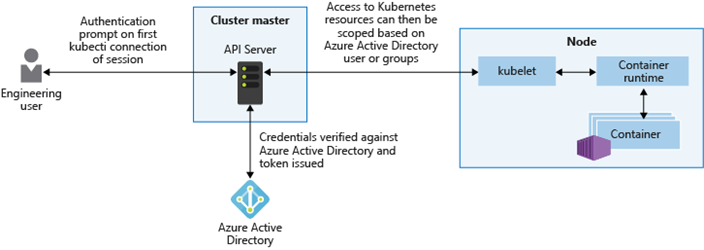

Azure AD and Kubernetes RBAC integration

- Bind your Kubernetes RBAC roles to Azure AD users and groups

- Grant your Azure AD users or groups access to Kubernetes resources within a namespace or across a cluster
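One way to grant an Azure AD group access within a single namespace is a standard Kubernetes `RoleBinding` that references the group. This sketch assumes an AKS cluster with Azure AD integration enabled, where groups are identified by their Azure AD object ID; the namespace, placeholder object ID, and `aad_group_rolebinding` helper are hypothetical.

```python
import json


def aad_group_rolebinding(namespace: str, group_object_id: str, cluster_role: str = "edit") -> dict:
    """Grant an Azure AD group a built-in ClusterRole, scoped to a single namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",  # a ClusterRoleBinding would grant it cluster-wide
        "metadata": {"name": f"aad-{cluster_role}", "namespace": namespace},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": cluster_role,  # built-in roles include view, edit, admin
        },
        "subjects": [{
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Group",
            "name": group_object_id,  # with AKS Azure AD integration, groups are referenced by object ID
        }],
    }


if __name__ == "__main__":
    # Placeholder object ID; substitute your own Azure AD group's object ID.
    print(json.dumps(aad_group_rolebinding("payments-dev", "00000000-0000-0000-0000-000000000000"), indent=2))
```

Binding a ClusterRole through a namespaced RoleBinding reuses the built-in permission set while limiting its reach to one namespace, which fits the namespace-per-team model described earlier.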

Kubernetes Cluster Updates

- Kubernetes releases updates at a faster pace than more traditional infrastructure platforms. These updates usually include new features as well as bug and security fixes.

- AKS supports a limited window of Kubernetes minor versions (four at the time of writing), so plan to upgrade regularly

- Upgrading an AKS cluster is as simple as running a single Azure CLI command (az aks upgrade). AKS performs a graceful upgrade by safely cordoning and draining old nodes to minimize disruption to running applications. Once the new nodes are up and containers are running on them, AKS deletes the old nodes.
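In practice, you might run `az aks get-upgrades --resource-group <rg> --name <cluster>` to list available versions, then `az aks upgrade --resource-group <rg> --name <cluster> --kubernetes-version <version>`. The toy Python simulation below only illustrates the ordering described above (add a node, cordon, drain, delete); the `rolling_node_upgrade` function is hypothetical and simplifies what AKS actually does, for example around surge capacity.

```python
def rolling_node_upgrade(old_nodes: list, new_version: str) -> list:
    """Conceptual, one-node-at-a-time replacement; returns the log of steps.

    A simplified model for illustration only, not how AKS is implemented internally.
    """
    log = []
    for i, old in enumerate(old_nodes, start=1):
        new = f"node-{new_version}-{i}"
        log.append(f"add {new}")     # bring up a replacement on the new version first
        log.append(f"cordon {old}")  # stop new pods from being scheduled on the old node
        log.append(f"drain {old}")   # evict pods (respecting PodDisruptionBudgets) so they reschedule
        log.append(f"delete {old}")  # remove the old node once its workloads have moved
    return log


if __name__ == "__main__":
    for step in rolling_node_upgrade(["node-a", "node-b"], "1.28"):
        print(step)
```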

Node Patching

Linux

Linux nodes in AKS automatically check for kernel and security updates nightly and install them when available. If an update requires a reboot, AKS does not reboot the node automatically. A best practice for patching Linux nodes is to deploy kured (Kubernetes Reboot Daemon), which watches for the /var/run/reboot-required file (created when a reboot is required) and automatically reboots the node during a predefined maintenance window.
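kured’s core decision can be approximated in a few lines: reboot only when the sentinel file exists and the current time falls inside the maintenance window. This Python sketch is a hypothetical illustration, not kured’s code; kured itself also takes a cluster-wide lock so nodes reboot one at a time, and drains each node before rebooting it.

```python
from datetime import datetime, time
from pathlib import Path


def should_reboot(sentinel: Path, now: datetime, window_start: time, window_end: time) -> bool:
    """Reboot only if a reboot is pending AND we are inside the maintenance window."""
    if not sentinel.exists():
        return False                              # nothing pending
    t = now.time()
    if window_start <= window_end:
        return window_start <= t < window_end     # e.g. 02:00-05:00
    return t >= window_start or t < window_end    # window that wraps midnight, e.g. 22:00-04:00


if __name__ == "__main__":
    pending = should_reboot(Path("/var/run/reboot-required"), datetime.now(), time(2, 0), time(5, 0))
    print("reboot now" if pending else "skip")
```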

Windows

Windows nodes are patched differently. They don’t receive daily updates the way Linux nodes do; instead, you update them by performing an AKS upgrade, which creates new nodes from the latest Windows Server base image and patches.

Pod Identities

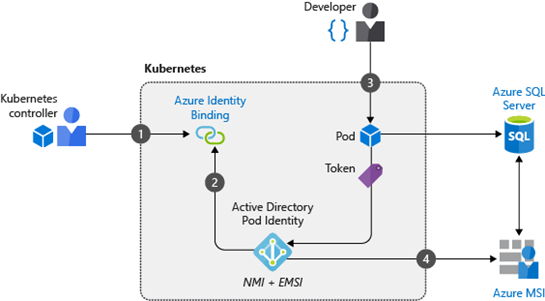

If your containers require access to the Azure Resource Manager (ARM) API, there is no need to provide fixed credentials that must be rotated periodically. Azure AD pod identity can be deployed to your cluster, allowing your containers to dynamically acquire access to Azure APIs and services through Managed Identities (marked Azure MSI in the diagram above).
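With the open-source AAD Pod Identity project, the mapping is expressed through two custom resources and a pod label. The sketch below builds an `AzureIdentity` (pointing at a user-assigned managed identity) and an `AzureIdentityBinding`, assuming the `aadpodidentity.k8s.io/v1` CRDs are installed in the cluster; the identity name, selector, ID placeholders, and helper functions are hypothetical. Newer AKS guidance favors Azure AD Workload Identity, which is configured differently.

```python
import json

API = "aadpodidentity.k8s.io/v1"


def pod_identity_manifests(name: str, resource_id: str, client_id: str, selector: str) -> list:
    """AzureIdentity + AzureIdentityBinding: pods labeled aadpodidbinding=<selector> get this identity."""
    identity = {
        "apiVersion": API,
        "kind": "AzureIdentity",
        "metadata": {"name": name},
        "spec": {"type": 0, "resourceID": resource_id, "clientID": client_id},  # 0 = user-assigned MSI
    }
    binding = {
        "apiVersion": API,
        "kind": "AzureIdentityBinding",
        "metadata": {"name": f"{name}-binding"},
        "spec": {"azureIdentity": name, "selector": selector},
    }
    return [identity, binding]


def pod_labels(selector: str) -> dict:
    """Label a pod template must carry to receive the bound identity."""
    return {"aadpodidbinding": selector}


if __name__ == "__main__":
    # Placeholder IDs; substitute the managed identity's real resource ID and client ID.
    rid = ("/subscriptions/<sub-id>/resourceGroups/<rg>/providers/"
           "Microsoft.ManagedIdentity/userAssignedIdentities/app-identity")
    for m in pod_identity_manifests("app-identity", rid, "<client-id>", "app"):
        print(json.dumps(m, indent=2))
    print(pod_labels("app"))
```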

Limit container access

Avoid creating applications and containers that require elevated privileges or root access.
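As a sketch of what avoiding elevated privileges can mean in a pod spec, the container below sets common `securityContext` fields. The container name, image, and `restricted_container` helper are hypothetical; `readOnlyRootFilesystem` in particular assumes the application writes only to mounted volumes.

```python
import json


def restricted_container(name: str, image: str) -> dict:
    """Container spec that refuses root and privilege escalation."""
    return {
        "name": name,
        "image": image,
        "securityContext": {
            "runAsNonRoot": True,               # kubelet refuses to start the container as UID 0
            "allowPrivilegeEscalation": False,  # blocks setuid binaries from gaining more privileges
            "readOnlyRootFilesystem": True,     # app can only write to mounted volumes
            "capabilities": {"drop": ["ALL"]},  # drop every Linux capability by default
        },
    }


if __name__ == "__main__":
    print(json.dumps(restricted_container("web", "myregistry.azurecr.io/web:1.0"), indent=2))
```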

Monitoring

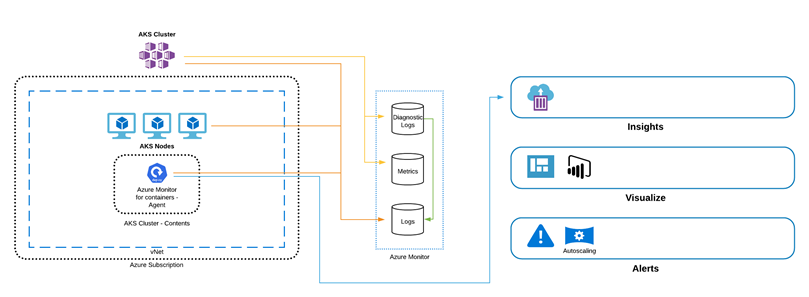

Because AKS integrates natively with other Azure services, you can use Azure Monitor to monitor containers in AKS.

- Toggle-based implementation: can be enabled after cluster creation or enforced via Azure Policy

- Multi-cluster and cluster-specific views

- Integrates with Log Analytics

- Ability to query historic data

- Analyze your Cluster, Nodes, Controllers, and Containers

- Alert on Cluster & Container performance by writing customizable Log Analytics search queries

- Integrate Application logging and exception handling with Application Insights

Real Life Example

Logicworks is a Microsoft Azure Gold Partner that helps companies migrate their applications to Azure. In the example below, one of our customers was looking to deploy and scale their public-facing web application on AKS to address the following business requirements:

- Achieve portability across on-prem and public clouds

- Accelerate containerized application development

- Unify development and operational teams on a single platform

- Take advantage of native integration into the Azure ecosystem to easily achieve:

  - Enterprise-Grade Security

    - Azure Active Directory integration

    - Track, validate, and enforce compliance across the Azure estate and AKS clusters

    - Hardened OS images for nodes

  - Operational Excellence

    - Achieve high availability and fault tolerance through the use of availability zones

    - Elastically provision compute capacity without needing to automate and manage the underlying infrastructure

    - Gain insight into and visibility of your AKS environment through automatically configured control plane telemetry, log aggregation, and container health monitoring

The customer’s architecture incorporates many common best practices to ensure we meet the customer’s business and operational requirements:

Cluster Multi-Tenancy

SDLC environments are split across two clusters, isolating production from lower SDLC environments such as dev and stage. Within each cluster, namespaces provide the same operational benefits as separate clusters while saving the cost and operational complexity of deploying an AKS cluster per SDLC environment.

Scheduling and Resource Quotas

Since multiple SDLC environments and other applications share the same cluster, it’s imperative to establish scheduling rules and resource quotas so that applications and the services they depend on get the resources they require. Combined with the cluster autoscaler, this ensures our applications get the resources they need, with compute infrastructure scaled out when demand rises and scaled in when it falls.

Azure AD integration

The cluster leverages Azure AD to authenticate and authorize users who access AKS clusters and perform CRUD (create, read, update, and delete) operations against them. Azure AD integration makes it easy to unify the Azure and Kubernetes layers of authentication and give the right personnel the level of access their responsibilities require, while adhering to the principle of least privilege.

Pod Identities

Instead of hardcoding static credentials within our containers, Pod Identity is deployed into the default namespace and dynamically assigns Managed Identities to the appropriate pods determined by label. This provides our example application the ability to write to Cosmos DB and our CI/CD pipelines the ability to deploy containers to production and stage clusters.

Ingress Controller

An ingress controller brings external traffic into the AKS cluster according to the ingress rules and routes we define, providing application services with reverse proxying, traffic routing and load balancing, and TLS termination. This allows us to distribute traffic evenly across our application services to meet scalability and reliability requirements.
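For illustration, a `networking.k8s.io/v1` Ingress that terminates TLS and routes by path might be generated as below. The post does not say which ingress controller the customer used, so the `nginx` ingress class, host name, TLS secret, service names, and the `ingress_manifest` helper are all assumptions.

```python
import json


def ingress_manifest(host: str, tls_secret: str, routes: dict, ingress_class: str = "nginx") -> dict:
    """networking.k8s.io/v1 Ingress: TLS termination for `host` plus path-based routing to Services."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": svc, "port": {"number": port}}},
        }
        for path, (svc, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "web-ingress"},
        "spec": {
            "ingressClassName": ingress_class,                     # which controller handles it
            "tls": [{"hosts": [host], "secretName": tls_secret}],  # certificate stored as a Secret
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    # Hypothetical host, secret and service names.
    print(json.dumps(ingress_manifest(
        "app.example.com", "app-tls",
        {"/api": ("api-svc", 8080), "/": ("web-svc", 80)},
    ), indent=2))
```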

Monitoring

Naturally, monitoring the day-to-day performance and operations of our AKS clusters is key to maintaining uptime and proactively solving potential issues. Because AKS monitoring can be switched on with a simple toggle, application services hosted on the cluster can easily be monitored and debugged using Azure Monitor.

Summary

Azure Kubernetes Service is a powerful service for running containers in the cloud. Best of all, you only pay for the VMs and other resources consumed, not for AKS itself, so it’s easy to try out. With the best practices described in this post and the AKS Quickstart, you should be able to launch a test cluster in under an hour and see the benefits of AKS for yourself.

Need help architecting or managing an application on Azure Kubernetes Service? Contact us or learn more about our Azure Migration Service.
